Bhakshali Algorithm

This method for finding an approximation to a square root or square root reciprocal was described in an ancient Indian mathematical manuscript. This algorithm is quartically convergent. The iterative equations are

![]()

![]()

![]()

The variable ![]() approaches zero and

approaches zero and ![]() holds the value of square root.

holds the value of square root.

Two variable iterative method

This method is used to find square root of number 1<X<3. However the range of ![]() can be increased. This method converges quadratically. The equations are

can be increased. This method converges quadratically. The equations are

![]()

![]()

Initially ![]() and

and ![]() . Finally the variable

. Finally the variable ![]() holds the final value of

holds the final value of ![]() .

.

Halley’s method

Halley’s method is actually the Householder’s method of order two. The equation which governs this algorithm is

![]()

![]()

At the final step, ![]() holds the value of square root reciprocal of

holds the value of square root reciprocal of ![]() and

and ![]() converges to 1. This method converges cubically but involves four multiplications per iteration.

converges to 1. This method converges cubically but involves four multiplications per iteration.

Newton Raphson Iteration

Newton-Raphson’s iterative algorithm is a very popular method to approximate a given function. It can be used to compute square root or square root reciprocal of a given number. The Newton-Raphson’s iterative equation is

![]()

where ![]() is the derivative of

is the derivative of ![]() . The function which is used to compute the square root reciprocal of a number is

. The function which is used to compute the square root reciprocal of a number is ![]() , where X is the input operand. The Newton-Raphson iteration gives

, where X is the input operand. The Newton-Raphson iteration gives

![]()

Here ![]() is taken as close approximation of

is taken as close approximation of ![]() . After some finite iterations the above equation converges to the square root reciprocal of X. It is very obvious that the value of

. After some finite iterations the above equation converges to the square root reciprocal of X. It is very obvious that the value of ![]() must be chosen care fully to converge.

must be chosen care fully to converge.

The square root of ![]() can be computed by multiplying the final output

can be computed by multiplying the final output ![]() by

by ![]() or directly iterating for square root. The function

or directly iterating for square root. The function ![]() can be used in this case. Then the iterative equation will be

can be used in this case. Then the iterative equation will be

![]()

Similar to the previous algorithms here also ![]() takes the closest approximation of

takes the closest approximation of ![]() . One of the disadvantage of direct computation of square root by Newton’s theorem is that it involves a division operation which is much complex than a multiplication operation.

. One of the disadvantage of direct computation of square root by Newton’s theorem is that it involves a division operation which is much complex than a multiplication operation.

Gold-Schmidt Algorithm

The Gold-Schmidt algorithm is one of the popular fast iterative methods. This algorithm can be used to compute division, square root or square root reciprocal. The basic version of the Gold-Schmidt algorithm to compute square root reciprocal of the radicand X is governed by the following equations. All these equations are sequentially executed in a iteration.

![]()

![]()

![]()

Initially ![]() ,

, ![]() = a rough estimate of

= a rough estimate of ![]() and

and ![]() . The initial guess of

. The initial guess of ![]() can be done by selecting a close value from a predefined LUT. The algorithm runs until

can be done by selecting a close value from a predefined LUT. The algorithm runs until ![]() converges to 1 or for a fixed number of iterations. Finally square root reciprocal is computed as,

converges to 1 or for a fixed number of iterations. Finally square root reciprocal is computed as, ![]() . Note that this algorithm can be used to compute both square root and its reciprocal. The square root function can be computed by multiplying the final value of

. Note that this algorithm can be used to compute both square root and its reciprocal. The square root function can be computed by multiplying the final value of ![]() by

by ![]() as

as ![]() . This algorithm involves three multiplications and one subtraction per iteration to compute

. This algorithm involves three multiplications and one subtraction per iteration to compute ![]() . Square root can be computed by another multiplication at the end.

. Square root can be computed by another multiplication at the end.

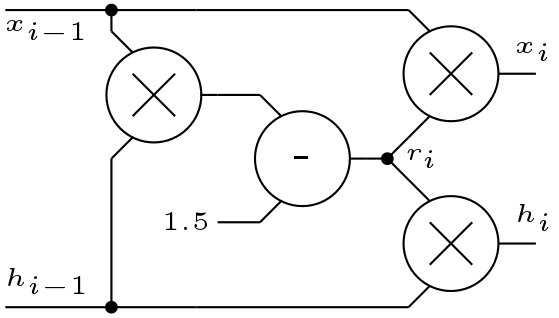

Another version of Gold-Schmidt algorithm is shown below

![]()

![]()

![]()

Here initially ![]() equals to the closest approximation of the function

equals to the closest approximation of the function ![]() ,

, ![]() and

and ![]() . At the end of the iterations

. At the end of the iterations ![]() converges to

converges to ![]() and

and ![]() converges to

converges to ![]() . This algorithms runs until

. This algorithms runs until ![]() reaches close to 0 or for fixed number of iterations. This improved version of Gold-Schmidt algorithm is faster than its previous version as at output stage no multiplier is needed to compute the square root function. But number of arithmetic operations per iteration remains same.

reaches close to 0 or for fixed number of iterations. This improved version of Gold-Schmidt algorithm is faster than its previous version as at output stage no multiplier is needed to compute the square root function. But number of arithmetic operations per iteration remains same.

Choice of Algorithm

The algorithms discussed above computes square root and its reciprocal by sequential execution of some equations until stopping criteria is reached or for a fixed number of iterations. In software application, the choice of algorithm is not so crucial but in hardware implementation the algorithm must be chosen carefully. Following are the criteria to select an algorithm.

- Resources: Implementation of the algorithm must be hardware efficient. (Number of multiplication and addition per iteration)

- Iteration Count: Iterative algorithms mentioned above are meant to be faster, so the chosen method should produce an acceptable estimate after minimum number of iterations.

- Error: The acceptable amount of error is an important metric in choosing an algorithm.

- Initial Guess: Technique to choose the initial value should be simple otherwise extra storage is wasted.

Two methods among the above mentioned algorithms, Newton-Raphson and Gold-Schmidt Algorithm are popularly used. In this tutorial, we have chosen the Gold-Schmidt Algorithm (version 2) for implementation. The implementation of Gold-Schmidt Algorithm is discussed below.